Garmin's nüvi 700 series brings two exciting new features, multi-destination routing and "Where Am I?/Where's My Car?", to its popular pocket-sized GPS navigator lineup. As with all nüvis, you get Garmin reliability, fast satellite lock from an integrated high-sensitivity receiver, a slim pocket-sized design with a gorgeous display, an easy, intuitive interface, and detailed NAVTEQ maps for the United States, Canada, and Puerto Rico with more than 6 million name-searchable points of interest. All 700-series navigators also offer spoken directions with real street names, an MP3 player and photo viewer, and an FM transmitter that plays voice prompts, MP3s, audiobooks, and more directly through your vehicle's stereo system. The nüvi 760 and 770 add integrated traffic receivers and Bluetooth for hands-free calling. The nüvi 770 adds maps for Europe, and the nüvi 780 adds enhanced MSN Direct content.

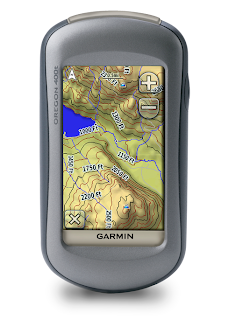

Garmin Oregon 400t Touch-Screen Handheld GPS Receiver with US Topo Maps

Unlike the other two GPS devices in our Top Picks, this one is an outdoor GPS, and this high-end model features a touch screen. The Oregon 400t is the best off-road GPS available: its tough, 3-inch (diagonal), sunlight-readable color touchscreen is the highest of the high end among devices of this kind. Its easy-to-use interface means you'll spend more time enjoying the outdoors and less time searching for information. The Oregon 400t is ruggedly built to survive hard impacts and can be submerged in up to one meter of water for half an hour without damage. It supports wireless data sharing and comes preloaded with topographic maps, perfect for hiking and biking. It can also be upgraded with additional maps, including City Navigator, which adds street-level, turn-by-turn automotive navigation for the U.S., Canada, and Puerto Rico along with a six-million-entry points-of-interest (POI) database.

With the Oregon 400t you can share your waypoints, tracks, routes, and geocaches wirelessly with other Oregon and Colorado users, so you can send your favorite hike to a friend, or the location of a cache to find. Sharing data is easy: just touch "Send" to transfer your information to a similar unit. The Oregon 400t also supports Geocaching.com GPX files, so you can download geocaches and their details straight to your unit. No more entering coordinates by hand or carrying paper printouts: simply load the GPX file onto your unit and start hunting for caches. You can also show off the photos from your trip instantly.

Although Garmin does not reveal which GPS chipset the device uses, the receiver is highly sensitive and satellite acquisition is rapid. With its high-sensitivity, WAAS-enabled GPS receiver and HotFix satellite prediction, the Oregon 400t locates your position quickly and precisely and holds its GPS fix even under heavy tree cover and in deep canyons.

The advantage is clear: whether you're in deep woods or just near tall buildings and trees, you can count on the Oregon to help you find your way when you need it most. Both durable and waterproof, the Oregon 400t is built to withstand the elements; bumps, dust, dirt, humidity, and water are no match for this rugged navigator. The Oregon is easy to use and maintain, and it runs on two AA batteries. A latch releases the back panel to expose the battery compartment and memory card slot. Insert a microSD card and some batteries, and this highly mobile GPS is ready to go anywhere.
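Loading a Geocaching.com GPX file works because GPX is a plain XML format in which each cache or waypoint is a `<wpt>` element carrying `lat` and `lon` attributes. Below is a minimal Python sketch of reading those waypoints with the standard library, assuming a simple GPX file with a `<name>` child per waypoint. It ignores the extra cache details that Geocaching.com files carry, it does not reflect how the Oregon's firmware works, and the cache code and coordinates in the sample are made up:

```python
import xml.etree.ElementTree as ET


def _local(tag):
    """Strip an XML namespace, e.g. '{http://www.topografix.com/GPX/1/0}wpt' -> 'wpt'."""
    return tag.rsplit("}", 1)[-1]


def read_waypoints(gpx_text):
    """Return (name, lat, lon) for every <wpt> element in a GPX document."""
    root = ET.fromstring(gpx_text)
    waypoints = []
    for element in root.iter():
        if _local(element.tag) != "wpt":
            continue
        # The waypoint name (for a cache, usually its code) is an optional child element.
        name = next((child.text for child in element if _local(child.tag) == "name"), None)
        waypoints.append((name, float(element.get("lat")), float(element.get("lon"))))
    return waypoints


# Made-up sample file with one hypothetical cache.
sample = """<gpx xmlns="http://www.topografix.com/GPX/1/0" version="1.0" creator="example">
  <wpt lat="47.6062" lon="-122.3321"><name>GC0000</name></wpt>
</gpx>"""
print(read_waypoints(sample))
```

Matching on the local tag name keeps the sketch working whether the file declares the GPX 1.0 or 1.1 namespace, or none at all.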

Microsoft Streets and Trips 2009 with GPS Locator DVD

You know where you want to go, and Microsoft Streets & Trips 2008 with GPS can help you get there. This best-selling travel and map software has been enhanced with several new features that take the guesswork out of traveling, letting you focus on the sights instead of the directions. With more than 1.6 million points of interest to choose from, updated maps, and the most extensive trip-planning features you'll find anywhere, Streets & Trips helps you plan your trip your way. This package of software and GPS Locator is the ultimate travel tool for anyone who wants to plan a memorable vacation or simply needs to find their way around town without a hitch.

It starts with all the door-to-door routing, location-finding, and comprehensive mapping power of Microsoft Streets and Trips, and adds a plug-and-play Global Positioning System (GPS) locator that helps you stay on track and always knows where your next turn is. Combining the power and versatility of a GPS receiver with pinpoint-accurate, easy-to-use mapping information, Streets & Trips 2008 with GPS Locator is the essential travel tool.

What Are the Practical Uses of Global Positioning Systems?

These are some of the ways GPS is used in the "real world" for a variety of purposes. In this lens we feature the "best of breed" products in each of these GPS use categories.

uses of gps technology

1. Geocaching (High-Tech Treasure Hunting): Geocaching takes treasure hunting one step further by using GPS units to locate hidden treasures. The name of the game combines "Geo," from geography, and "caching," from the act of hiding a cache (or treasure). The term cache is commonly used among hikers and campers to refer to a hidden supply of food or other provisions.

2. Personal Emergencies: GPS-equipped cell phones can transmit precise locations to 911 dispatchers. This gives the dispatcher an immediate and accurate location instead of relying on descriptions from callers who may be unfamiliar with the area or too distraught to explain where they are. The same technology has also helped catch people who make crank 911 calls from GPS-enabled cell phones.

3. Recreational Uses: GPS is popular among hikers, hunters, snowmobilers, mountain bikers, and cross-country skiers. Trails can be viewed in topographic map software and traced on the screen, and the resulting route can be downloaded into the GPS. Then, in the field, the route can be displayed in the GPS's map window and easily followed. Another use is to leave the GPS switched on in a mesh pocket of your backpack while hiking, so that it records a tracklog of your hike. The tracklog can be plotted on maps, used to estimate the distance hiked, and even printed with mapping software and shared with friends.

4. Emergency Roadside Assistance: OnStar by GM, for example, uses GPS to track vehicles' whereabouts and allows motorists to summon emergency help or ask for directions.

5. Tracking Your Kids: GPS-enabled cell phones can be used to monitor your kids. When Johnny calls you for his after-school check-in, you can confirm whether his coordinates put him at the public library or at the pool.

6. Photography (Geotagging Photos): Some cameras annotate your digital and traditional images with the date and time; newer GPS-enabled cameras can also annotate them with precise coordinates marking where each photo was taken. These annotations can be very useful in scientific field work, real estate, law enforcement, and many other areas.

7. Automobiles and Driving: GPS navigation devices can guide your car through traffic and help you find your way to any destination. Many automotive GPS systems come with preloaded destinations or points of interest (POI) that provide directions to attractions and to important pit stops such as gas stations, hotels, motels, and lodges. For vacation travel, or any other time you need to map out a route, many systems also offer route-planning functions.

8. Laptop GPS: Laptop GPS receivers turn any mobile computer into a fully functional GPS system. You can save money by using a laptop GPS receiver in the vehicle or for office-based meetings to track locations and find directions, or simply to plan a bike ride for the next day. The built-in capabilities of the laptop, combined with navigation and mapping programs, provide much more extensive information than most handheld units. Because a laptop is already a familiar tool, there is no new hardware to learn. Laptop GPS receivers attach to your laptop, notebook, or ultraportable computer over USB or serial connections, like many other peripherals. Often bundled with software, they make very effective GPS systems.
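Item 3 above mentions using a tracklog to estimate the distance hiked. One common way software does this is to sum the great-circle distances between consecutive track points. Below is a minimal Python sketch using the haversine formula, assuming a spherical Earth with a mean radius of 6,371 km and points given as (latitude, longitude) in decimal degrees. Real mapping programs may use more precise ellipsoidal formulas, and the track in the example is hypothetical:

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius; assumes a spherical Earth


def haversine_km(p, q):
    """Great-circle distance in km between two (lat, lon) points in decimal degrees."""
    lat1, lon1 = map(math.radians, p)
    lat2, lon2 = map(math.radians, q)
    a = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def track_length_km(points):
    """Total tracklog length: the sum of the legs between consecutive points."""
    return sum(haversine_km(a, b) for a, b in zip(points, points[1:]))


# Hypothetical three-point track heading due north along a meridian.
track = [(45.0, -120.0), (45.01, -120.0), (45.02, -120.0)]
print(round(track_length_km(track), 2))  # 2.22
```

Because a tracklog samples the path only every few seconds or meters, this sum slightly underestimates the distance actually walked on winding trails.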
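Item 8 above says laptop GPS receivers plug in over USB or serial connections. Many such receivers stream plain-text NMEA 0183 sentences over that connection, and mapping software reads positions from them. That is an assumption about any particular receiver, so check its documentation. Below is a minimal Python sketch of decoding one GGA (fix data) sentence and verifying its XOR checksum. The sentence in the example is illustrative, and its checksum is computed rather than assumed:

```python
from functools import reduce


def nmea_checksum(body):
    """XOR of every character between '$' and '*'."""
    return reduce(lambda acc, ch: acc ^ ord(ch), body, 0)


def _to_degrees(value, hemisphere, degree_digits):
    """Convert NMEA (d)ddmm.mmmm plus a hemisphere letter to signed decimal degrees."""
    degrees = int(value[:degree_digits])
    minutes = float(value[degree_digits:])
    result = degrees + minutes / 60.0
    return -result if hemisphere in ("S", "W") else result


def parse_gga(sentence):
    """Return latitude, longitude, satellite count, and altitude from a GGA sentence."""
    sentence = sentence.strip()
    if not sentence.startswith("$") or "*" not in sentence:
        raise ValueError("not an NMEA sentence")
    body, given = sentence[1:].split("*", 1)
    if nmea_checksum(body) != int(given, 16):
        raise ValueError("checksum mismatch")
    fields = body.split(",")
    if not fields[0].endswith("GGA"):
        raise ValueError("not a GGA sentence")
    return {
        "lat": _to_degrees(fields[2], fields[3], 2),   # ddmm.mmmm
        "lon": _to_degrees(fields[4], fields[5], 3),   # dddmm.mmmm
        "satellites": int(fields[7]),
        "altitude_m": float(fields[9]),
    }


body = "GPGGA,123519,4807.038,N,01131.000,E,1,08,0.9,545.4,M,46.9,M,,"
sentence = f"${body}*{nmea_checksum(body):02X}"
print(parse_gga(sentence))
```

On a real device you would read lines from the serial port (for example with a third-party library such as pySerial) and pass each `$..GGA` line to `parse_gga`.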

Magellan Maestro 4210 Portable Automotive GPS System

The more reasonably priced, yet still top-of-the-line, GPS is the ultra-thin Magellan Maestro 4250. The Maestro comes with a 4.3-inch widescreen display, a built-in traffic receiver for live updates, and an enormous built-in database of six million points of interest (POI). It also integrates with the AAA TourBook to display listings of restaurants and accommodations, local attractions, approved auto repair shops, AAA office locations, and more. This high-end model also offers text-to-speech and a Bluetooth phone interface. Perhaps its most notable feature is voice command, which lets you control many of its functions hands-free.

The Maestro 4050 let you control many of the functions with your voice. On the 4250, Voice Command works even better considering that you don't have to train the device. In fact, using Voice Command becomes quite addictive. Without an active route, the Voice Command menu can help you find the nearest coffee shop, restaurant, gas station, or ATM; navigate Home; or enter the roadside assistance menu, just to mention a few options. While not as brilliant and full-featured as the Garmin speech system, it does the job.

It also features a Bluetooth interface and pairs quickly but lacks some of the advanced features found in the Garmin such as downloading and using stored contacts from your mobile phone.

However, the most robust feature of the Maestro is its enormous database and up-to-date maps. Unlike those of other devices, its maps show all the surrounding features, including the newest bridges and roads. This remains Magellan's top-of-the-line GPS despite the recent introduction of the 5310, which has a larger screen but fewer features.

Garmin 885/885T Bluetooth Automotive GPS System w/ MSN Direct & Voice Recognition

Among reviewers, Garmin currently ranks number 1 among all GPS manufacturers. The nüvi 885/885T is Garmin's new top-of-the-line automotive GPS and has been rated number 1 by the highly regarded Consumer Reports.

This state-of-the-art GPS device features a 4.3-inch, 480-by-272-pixel WQVGA screen. Navigation is simplicity itself, with an easy-to-use main menu offering large icons for Where To and View Map. Two smaller icons at the bottom of the screen provide access to the volume controls and the Tools menu. If you've paired the device with a compatible Bluetooth phone, a phone icon also appears on the main screen.

There's built-in support for Microsoft's MSN Direct service. Available from the Tools menu or the Where To screen, MSN Direct provides access to a host of useful information, such as live traffic, weather, movie times, news, stock information, local events, and even gas prices at nearby service stations.

The nüvi 880 also has a number of useful built-in travel tools, including currency and unit converters, a four-zone world clock, a four-function calculator, and 11 games. You also get a music player, an Audible audiobook player, and a picture viewer. The Where Am I tool gives you your exact latitude and longitude, nearest street address, and current intersection, and you get one-tap directions to nearby hospitals, police stations, and gas stations.

What makes this device special is its sophisticated speech recognition. Garmin takes a different approach from other GPS manufacturers, however. The Magellan Maestro, for example, activates its voice command mode when it hears the keyword "Magellan." Unfortunately, it often misinterprets passenger conversation and triggers voice command mode by mistake. Garmin solves this problem by giving the 880 a small wireless remote designed to be strapped to the steering wheel. A large push-to-talk button on the remote puts the nüvi 880 into Listen mode, and a green icon appears in the upper right-hand corner of the screen when it's your turn to speak. A red icon tells you that the device isn't accepting speech input at that moment or didn't recognize what you said. The smaller button on the remote takes the navigator out of Listen mode. Alternatively, after about 10 seconds of silence, the speech icon disappears and the device reverts to normal touch-screen operation.

The speech recognition abilities of the Garmin are uncanny. If you use Dragon NaturallySpeaking on your PC and have to dictate carefully in a silent room to get any reasonable accuracy, you probably wonder how a GPS surrounded by street noise can figure out where you're going. But all you need to do is press the push-to-talk button and speak your "Where To" destination in your usual conversational manner, and the Garmin will prompt you for additional answers until it has what it needs. It is remarkably better than NaturallySpeaking at picking up numbers accurately.

The Garmin nüvi 880 is the best general travel GPS on the market. This luxury device offers text-to-speech conversion, multisegment routing, Bluetooth, media players, and travel tools, and its uncannily good speech recognition blows away the competition and seals the deal.

How GPS Works

When people talk about "a GPS," they usually mean a GPS receiver. The Global Positioning System (GPS) is actually a constellation of 27 Earth-orbiting satellites (24 in operation and three extras in case one fails). The U.S. military developed and implemented this satellite network as a military navigation system, but soon opened it up to everybody else.

Each of these 3,000- to 4,000-pound solar-powered satellites circles the globe at an altitude of about 12,000 miles (19,300 km), completing two orbits every day. The orbits are arranged so that at any time, anywhere on Earth, at least four satellites are "visible" in the sky.
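The "two orbits every day" figure can be checked with Kepler's third law for a circular orbit, T = 2π√(a³/μ), where a is the orbit radius (Earth's radius plus the altitude) and μ is Earth's standard gravitational parameter. Below is a minimal Python sketch, assuming a circular orbit and a spherical Earth with a mean radius of 6,371 km:

```python
import math

MU_EARTH = 3.986004418e14  # Earth's standard gravitational parameter, m^3/s^2
EARTH_RADIUS_KM = 6371.0   # mean Earth radius (spherical approximation)


def orbital_period_hours(altitude_km):
    """Circular-orbit period from Kepler's third law, T = 2*pi*sqrt(a^3 / mu)."""
    a = (EARTH_RADIUS_KM + altitude_km) * 1000.0  # orbit radius in metres
    return 2 * math.pi * math.sqrt(a ** 3 / MU_EARTH) / 3600.0


print(round(orbital_period_hours(19_300), 1))  # 11.4
```

With the text's rounded 19,300 km the result is about 11.4 hours. With the commonly quoted GPS altitude of roughly 20,200 km, the same formula gives almost exactly 12 hours, which is where "two orbits a day" comes from.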

A GPS receiver's job is to locate four or more of these satellites, figure out the distance to each, and use this information to deduce its own location. This operation is based on a simple mathematical principle called trilateration: the receiver calculates its position on Earth from the signals of four located satellites. The system works well, but inaccuracies do creep in. For one thing, the method assumes the radio signals travel through the atmosphere at a constant speed (the speed of light). In fact, the Earth's atmosphere slows the signals somewhat, particularly as they pass through the ionosphere and troposphere. The delay varies depending on where you are on Earth, which makes it difficult to factor accurately into the distance calculations. Problems can also occur when radio signals bounce off large objects, such as skyscrapers, giving a receiver the impression that a satellite is farther away than it actually is. On top of all that, satellites sometimes broadcast bad almanac data, misreporting their own positions.
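The paragraph above says a receiver needs four satellites. A common way to see why: the receiver's clock is not perfectly synchronized with the satellites' clocks, so every measured distance (a "pseudorange") carries the same unknown clock error. That gives four unknowns (x, y, z, and the clock error), which need four measurements. Below is a minimal Python sketch that solves for all four by Gauss-Newton least squares. It assumes satellite positions are known in an Earth-centred frame in metres and that the ranges are noise-free. Real receivers also model atmospheric delays and weight their measurements, and this is not any particular receiver's algorithm:

```python
import math


def _solve(matrix, rhs):
    """Solve a small linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def locate(satellites, pseudoranges, max_iterations=20):
    """Estimate receiver (x, y, z) and clock bias, all in metres, by Gauss-Newton.

    Model: pseudorange_i = |satellite_i - receiver| + bias.
    Starts from the Earth's centre with zero bias.
    """
    est = [0.0, 0.0, 0.0, 0.0]  # x, y, z, bias
    for _ in range(max_iterations):
        jtj = [[0.0] * 4 for _ in range(4)]  # J^T J
        jtr = [0.0] * 4                      # J^T r
        for sat, rho in zip(satellites, pseudoranges):
            diff = [est[k] - sat[k] for k in range(3)]
            dist = math.sqrt(sum(d * d for d in diff))
            row = [d / dist for d in diff] + [1.0]  # Jacobian row of the model
            residual = rho - (dist + est[3])
            for i in range(4):
                jtr[i] += row[i] * residual
                for j in range(4):
                    jtj[i][j] += row[i] * row[j]
        step = _solve(jtj, jtr)
        est = [e + s for e, s in zip(est, step)]
        if max(abs(s) for s in step) < 1e-6:
            break
    return est


# Hypothetical check: a receiver on the equator at 0° longitude whose clock error
# adds 3 km to every range; satellite positions are illustrative, not real ephemeris.
truth = (6_371_000.0, 0.0, 0.0)
sats = [(26_560_000.0, 0.0, 0.0), (20e6, 15e6, 5e6), (20e6, -15e6, 5e6),
        (20e6, 0.0, -17e6), (15e6, 10e6, 18e6)]
ranges = [math.dist(truth, s) + 3_000.0 for s in sats]
print([round(v, 3) for v in locate(sats, ranges)])
```

With more than four satellites the system is overdetermined, and the least-squares step simply uses all of them. That redundancy is one reason receivers prefer to track as many satellites as they can.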

Differential GPS (DGPS) helps correct these errors. The basic idea is to gauge GPS inaccuracy at a stationary receiver station with a known location. Since the DGPS hardware at the station already knows its own position, it can easily calculate its receiver's inaccuracy. The station then broadcasts a radio signal to all DGPS-equipped receivers in the area, providing signal correction information for that area. In general, access to this correction information makes DGPS receivers much more accurate than ordinary receivers.
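The correction idea in the previous paragraph works like this: the base station compares each satellite's measured range with the true geometric range from its surveyed position, and a nearby receiver subtracts that per-satellite difference from its own measurements. Below is a minimal Python sketch, assuming both receivers see the same error for each satellite (roughly true only when they are close together) and that satellite positions are known. The names and numbers are illustrative and do not follow any real DGPS message format such as RTCM SC-104:

```python
import math


def range_corrections(base_position, satellites, base_ranges):
    """Per-satellite correction: measured range minus true geometric range at the base."""
    return {
        sat_id: base_ranges[sat_id] - math.dist(base_position, position)
        for sat_id, position in satellites.items()
        if sat_id in base_ranges
    }


def apply_corrections(rover_ranges, corrections):
    """Subtract the base station's correction from every rover range it covers."""
    return {
        sat_id: measured - corrections[sat_id]
        for sat_id, measured in rover_ranges.items()
        if sat_id in corrections
    }


# Hypothetical scenario in metres (Earth-centred frame): the same atmospheric
# error affects both receivers because they are close together.
satellites = {
    "A": (15.6e6, 7.54e6, 20.14e6),
    "B": (18.76e6, 2.75e6, 18.61e6),
    "C": (17.61e6, 14.63e6, 13.48e6),
}
shared_error = {"A": 7.5, "B": -3.2, "C": 12.0}
base = (6_371_000.0, 0.0, 0.0)
rover = (6_370_000.0, 5_000.0, 1_000.0)
base_ranges = {k: math.dist(base, p) + shared_error[k] for k, p in satellites.items()}
rover_ranges = {k: math.dist(rover, p) + shared_error[k] for k, p in satellites.items()}
corrected = apply_corrections(rover_ranges, range_corrections(base, satellites, base_ranges))
```

After correction, each rover range equals its true geometric range. In practice the errors decorrelate with distance from the base station, which is why DGPS accuracy falls off as you move farther away.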

Mandriva Linux 2010 RC2 available now

The last development version of Mandriva Linux 2010, RC2 (free 32-bit and 64-bit editions), went live on Saturday and is now available for testing from our FileForum.

Please note that this release should not be used to upgrade stable installations of Mandriva Linux, as there are unresolved issues with desktop environments such as KDE and GNOME that could conflict with previous versions. This pre-release edition is mainly for bug fixes; the official public release of Mandriva Linux 2010 remains scheduled for November 3.

This candidate is especially noteworthy because Mandriva Linux 2010 will be the first 100% free Linux distribution to fully integrate Moblin (2.0), the Linux Foundation's mobile platform designed to run optimally on Intel's Atom chips. While it is designed specifically for netbooks and such, it can still be useful for quick and straightforward tasks.

This version replaces the Splashy boot screen with Red Hat's Plymouth, adds a Guest Account feature, and updates its desktops to KDE 4.3.2, GNOME 2.28, and Xfce 4.6.1.

Windows Vista vs. Windows 7: Which One Is Better?

As many veteran Windows users already know, the operating system doesn't actually boot to an "idle state" -- it's not DOS. Since that time, Iolo has been characterizing the time it stops its stopwatch as the time that the CPU is "fully usable," which seems rather nebulous.

The discussion over whether this means Win7 is slower was declared moot today by TG Daily's Andrew Thomas, who wrote, "Ladies and gentlemen of the jury: I put it to you that there are no occasions when the boot time of a PC is important in any way whatsoever."

Unfortunately, there is one situation where the boot time of a PC is important: It affects the public's perception of whether the PC is actually faster, and thus better. And as we have seen with Vista, an operating system that was by all scientific measures much more secure than Windows XP, the perception that it was less secure -- by virtue of its highly sensitive behavior -- was as bad, if not worse, from the public vantage point as being insecure to begin with.

The public at large typically perceives the boot sequence of a computer as the period of time between startup and the first moment they're asked to log in. Betanews tested that interval this afternoon using an external stopwatch, and our triple-boot test system: an Intel Core 2 Quad Q6600-based computer using a Gigabyte GA-965P-DS3 motherboard, an Nvidia 8600 GTS-series video card, 3 GB of DDR2 DRAM, and a 640 GB Seagate Barracuda 7200.11 hard drive. Both Vista and Win7 partitions are located on this same drive.

The interval we tested is between the pressing of Enter at the multi-boot selection screen, and the moment the login screen appears. While the tools I used for timing were an ordinary digital stopwatch and my eyeballs, I will gladly let everyone know that I use these same tools to test qualifications at the Indianapolis Motor Speedway, and my measurements vary from those at the Timing & Scoring booth usually by about 0.05 seconds.

In tests of what I'll call the "perceived boot interval" on the same machine, Windows 7 posted a five-boot average time of 24.214 seconds. Windows Vista, booting from the exact same machine and the exact same disk (just a different partition) posted an average of 36.262 seconds -- just about 50% slower. Exactly how much time is required for a Windows-based system to start idling down and doing relatively nothing -- the "fully usable" state that Iolo is looking for -- typically varies wildly depending on what drivers are installed, and what startup applications may be running. On a well-utilized XP-based system (and we have a truckload of those), that time may officially be never.

However, it's worth noting that in a separate test conducted by ChannelWeb's Samara Lynn this afternoon, she discovered that boot times for a system running Windows 7 with Iolo's System Mechanic software installed were typically slower than for the same system with System Mechanic not installed. This may be because System Mechanic was authored with Vista in mind -- which could explain a lot of things about Iolo's own evaluation.
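The "about 50% slower" figure is a ratio of the two five-boot averages, and the percentage depends on which time you divide by. Below is a small Python sketch that uses only the averages reported above (the individual boot times aren't given):

```python
def percent_longer(slower_s, faster_s):
    """How much longer the slower interval is, as a percentage of the faster one."""
    return (slower_s - faster_s) / faster_s * 100.0


def percent_shorter(slower_s, faster_s):
    """How much shorter the faster interval is, as a percentage of the slower one."""
    return (slower_s - faster_s) / slower_s * 100.0


vista_avg = 36.262  # Windows Vista five-boot average, seconds
win7_avg = 24.214   # Windows 7 five-boot average, seconds
print(round(percent_longer(vista_avg, win7_avg), 1))   # 49.8
print(round(percent_shorter(vista_avg, win7_avg), 1))  # 33.2
```

So Vista's perceived boot interval is about 50% longer than Windows 7's, or, seen from the other side, Windows 7's is about a third shorter than Vista's.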

The discussion over whether this means Win7 is slower was declared moot today by TG Daily's Andrew Thomas, who wrote, "Ladies and gentlemen of the jury: I put it to you that there are no occasions when the boot time of a PC is important in any way whatsoever."

Unfortunately, there is one situation where the boot time of a PC is important: It affects the public's perception of whether the PC is actually faster, and thus better. And as we have seen with Vista, an operating system that was by all scientific measures much more secure than Windows XP, the perception that it was less secure -- by virtue of its highly sensitive behavior -- was as bad, if not worse, from the public vantage point as being insecure to begin with.

The public at large typically perceives the boot sequence of a computer as the period between startup and the first moment they're asked to log in. Betanews tested that interval this afternoon using an external stopwatch and our triple-boot test system: an Intel Core 2 Quad Q6600-based computer with a Gigabyte GA-965P-DS3 motherboard, an Nvidia 8600 GTS-series video card, 3 GB of DDR2 DRAM, and a 640 GB Seagate Barracuda 7200.11 hard drive. The Vista and Win7 partitions are both located on this same drive.

The interval we tested runs from the press of Enter at the multi-boot selection screen to the moment the login screen appears. While the tools I used for timing were an ordinary digital stopwatch and my eyeballs, I will gladly let everyone know that I use these same tools to time qualifying at the Indianapolis Motor Speedway, and my measurements usually differ from those of the Timing & Scoring booth by about 0.05 seconds.

In tests of what I'll call the "perceived boot interval" on the same machine, Windows 7 posted a five-boot average time of 24.214 seconds. Windows Vista, booting from the exact same machine and the exact same disk (just a different partition) posted an average of 36.262 seconds -- just about 50% slower. Exactly how much time is required for a Windows-based system to start idling down and doing relatively nothing -- the "fully usable" state that Iolo is looking for -- typically varies wildly depending on what drivers are installed, and what startup applications may be running. On a well-utilized XP-based system (and we have a truckload of those), that time may officially be never.
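The "just about 50% slower" figure depends on which average is used as the base. A quick check using only the two published five-boot averages (the individual boot times aren't reported, so this is all there is to work with) puts Vista at roughly 49.8% longer than Windows 7, which is the same gap expressed as Windows 7 needing about 33.2% less time than Vista:

```python
# Five-boot averages from the Betanews test (seconds).
win7_avg = 24.214
vista_avg = 36.262

gap = vista_avg - win7_avg

# Gap measured against Windows 7's time: how much longer Vista takes.
vista_slower = gap / win7_avg
# Same gap measured against Vista's time: how much less time Windows 7 needs.
win7_faster = gap / vista_avg

print(f"Vista takes {vista_slower:.1%} longer than Windows 7")
print(f"Windows 7 needs {win7_faster:.1%} less time than Vista")
```

Both numbers describe the same 12-second difference; which one a headline picks simply depends on the denominator.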

However, it's worth noting that in a separate test conducted by ChannelWeb's Samara Lynn this afternoon, she discovered that boot times for a system running Windows 7 with Iolo's System Mechanic software installed were typically slower than for the same system with System Mechanic not installed. This may be because System Mechanic was authored with Vista in mind -- which could explain a lot of things about Iolo's own evaluation.

Google: No Chrome OS event tomorrow, contrary to reports

A presentation that Google had scheduled for early tomorrow evening at its Mountain View offices, entitled "Front End Engineering Open House," will be a discussion about the Google Chrome Web browser, not a preview of Google Chrome OS as reported by multiple Web sites this afternoon.

"This is actually just a small recruiting event and we won't be talking about Chrome OS at all," a Google spokesperson told Betanews moments ago, "just one engineer talking about UI design for Google Chrome (the browser)." The implication that Chrome OS was the subject was chalked up as a "false alarm."

Within the last few hours, Google took down the event's registration page due to heavy traffic. The event may very well already be booked solid.

A cottage industry in Chrome OS rumors has blossomed since last July, with a treasure trove of amateur Photoshop touch-ups purporting to depict the new netbook operating system, though showing very few real features beyond what's already been seen in Windows and the Chrome browser. One longs for the good old days when relatively convincing smuggled photographs passing themselves off as Mac System 7 showed up in one's mailbox.

BlackBerry Storm 2 vs. BlackBerry Storm

The major upgrades in the Storm 2 are the inclusion of Wi-Fi, the increase in storage, and the "next-gen" Surepress interface. Surepress was intended to mimic the click of a mouse on a touchscreen: any contact with the screen would highlight selections, but actions took place only when the screen was pressed in and clicked. Unfortunately, with a single point of contact behind the screen, the original Surepress user experience was somewhat unrefined. Research in Motion is promising much better accuracy with the new Surepress, which has four points of contact instead of just one. The company says this gives the onscreen keyboard (especially the portrait-mode keyboard, which was highly imprecise) better responsiveness, and should provide a more engaging touch experience.

The Storm 2 is going to launch later this month in the US (through Verizon), Canada, and the UK. In Canada, it's expected not only on Rogers Communications but also on BCE Inc. and Telus. In the UK, it's expected to arrive on Vodafone, where the device will reportedly be free with a two-year contract of at least £35 per month. American carriers have not yet disclosed how much the handset will be subsidized.

Yet another case for backing up your data: Snow Leopard

The bug was actually discovered within a week of Snow Leopard's launch back in August, when users found that logging out of their account, into a "guest" account, and then back into their personal account would completely erase the contents of their home folder (Documents, Movies, Pictures, Music, Sites).

Though the bug is now more than a month old, it's still claiming victims, as Apple's support forums show. However, Apple has yet to acknowledge the issue, and the conditions described above do not reliably reproduce the bug.

The issue is thought to affect only users who had active guest accounts in Leopard (Mac OS X 10.5), and the only workaround currently is to disable guest login altogether.

Users hit by the bug are likely to lose their data permanently unless they have a backup, so users who have guest accounts and upgraded to Snow Leopard from Leopard are advised to back up their data immediately. If the bug does eliminate their data, users running Time Machine simply need to insert their Snow Leopard install disc, hold down the "C" key while booting their Mac, and then select Utilities > Restore from Backup.

First public Opera 10.1 beta competes against its predecessor for performance

At a time when performance and speed are more important to browser users than ever before, and when Web app users need the best platform available, suddenly it's Opera Software that is having the most difficult time delivering. While Opera 10's "Turbo Mode" is intended to leverage the company's pre-rendering capabilities originally designed for the Opera Mini mobile browser, none of that matters with respect to raw JavaScript performance; and these days, Web browsers are essentially JavaScript engines with some markup on the side.

Today, the first builds of Opera 10.1 that officially bear the "Beta 1" label were released to the company's servers. Betanews tests on this latest build were not very promising, as its overall CRPI score outperforms that of the latest stable Opera 10 by less than one percent. In fact, in Vista, scores for the first public beta actually fell about 7% below those of the latest stable Opera 10.

Opera 10.1 Beta 1 scores a CRPI of 6.35, compared with 6.30 for the stable Opera 10 (which we retested in Vista) and 6.56 for the last Beta 1 preview build we tested last week.
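The margins above can be checked directly from the three overall scores. This small sketch, built around a hypothetical helper `pct_change()`, shows the public beta ahead of stable Opera 10 by under 1% (a relative change, not a difference in percentage points) and about 3.2% behind the preview build tested the week before:

```python
def pct_change(new, old):
    """Relative change of `new` against `old`, as a fraction (0.01 == 1%)."""
    return (new - old) / old

# Overall CRPI scores from the Betanews tests.
beta1 = 6.35    # Opera 10.1 Beta 1
stable = 6.30   # stable Opera 10, retested in Vista
preview = 6.56  # last Beta 1 preview build, tested the previous week

vs_stable = pct_change(beta1, stable)    # about +0.8%
vs_preview = pct_change(beta1, preview)  # about -3.2%
```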

Google vs. Yahoo vs. Bing

This week, all three of the world's top general search engines touted the addition of deep links to their search results, although Google has been actively experimenting with deep links since this time last year. The basic premise is this: For Web pages that have named anchors above selected subsections, the search engine can generate subheadings in its search results that link users directly to those subsections, or at least to subsections whose titles imply they may have some bearing on the query.

The fact that deep links are now official features of Google, Yahoo, and Bing search may not be nearly as relevant today as the fact that all three services made their announcements almost in unison. It's an indication of an actual race going on in the search engine field, reminiscent of the horse-and-buggy days of the mid-'90s when Lycos and AltaVista were vying against Yahoo for search supremacy. This despite the fact that Yahoo is due to begin using search results generated by Microsoft's search engines Real Soon Now.

But is all this just more bluster? Now that there at least appears to be a race once again in online search functionality, are the major players merely vying for position in the consumer consciousness, or are they really doing something in the laboratories? Betanews set out to see whether we could find any real-world examples of deep linking improving the quality of search results...knowing full well that as Web pages have evolved to become shorter, named anchors have fallen out of favor along with Netscape Navigator.
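A named anchor is just a fragment target on a page, so a crawler can turn each one into a deep link of the form `page#anchor`. The sketch below uses Python's standard `html.parser` module to collect `<a name="...">` targets, along with `id` attributes, which browsers also accept as fragment targets. The `deep_links()` helper and the example.com URL are hypothetical, and the sketch says nothing about how Google, Yahoo, or Bing actually index anchors:

```python
from html.parser import HTMLParser


class AnchorCollector(HTMLParser):
    """Collects fragment targets: <a name="..."> anchors and id="..." attributes."""

    def __init__(self):
        super().__init__()
        self.anchors = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # attribute values can be None for bare attributes
        if tag == "a" and attrs.get("name"):
            self.anchors.append(attrs["name"])
        elif attrs.get("id"):
            self.anchors.append(attrs["id"])


def deep_links(page_url, html):
    """Hypothetical helper: one page#fragment URL per anchor found in `html`."""
    collector = AnchorCollector()
    collector.feed(html)
    collector.close()
    return [f"{page_url}#{name}" for name in collector.anchors]


page = (
    '<h2><a name="debates"></a>The debates</h2><p>...</p>'
    '<h2 id="legacy">Legacy</h2>'
)
links = deep_links("http://example.com/lincoln", page)
```

A page with no anchors simply yields an empty list, which is the situation Betanews kept running into in the searches described here.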

Although we managed to uncover deep-linked pages through Google and Bing just by accident this week, it's in hunting for them intentionally that deep links seem to scatter like startled squirrels. We started with some purposefully general, broad queries that should stir up old, existing pages.

"Abraham Lincoln", for instance, turned up zero deeply-linked citations within the first 100 returned by Google. Yahoo, however, provided a deep-linked reference on page 1 to the Abraham Lincoln Bicentennial Commission entry on Wikipedia -- one of Yahoo's partner sites for its drill-down filtering feature. No more deeply-linked entries appeared in Yahoo's top 100, though. Bing also pulled up zero deeply-linked references in its top 100.

What if we refined the context -- would deep links come out of the woodwork? For Google and Bing, no: adding "Stephen Douglas" as a criterion, for instance, did precisely nothing to draw out any deeply-linked results. It's hard to imagine that, over all the years pages have been published on the Web, no page on the Lincoln/Douglas debates of 1858 ever used named anchors. It could, instead, indicate that the anchors on those pages have yet to be indexed.

But what's this? Yahoo turned up one deeply-linked reference to the debates as item #1 in its search results, and another reference to Stephen Douglas as #5. Once again, though, both are from Wikipedia; and although Wikipedia's shallowly-linked reference to the debates was #3 on Google and #2 on Bing, Yahoo's high placement along with links to history books for sale on Amazon as #2 and #3 (including links to reviews, obviously as a favor to another Yahoo partner, Amazon) indicates that Yahoo's success on this test may not be by virtue of a highly reformed index, but instead a special arrangement with partners.

If Yahoo were as active in indexing deep links as the Wikipedia results imply, it would have attached deep links to other entries it did turn up from wiki-based systems, such as an item on Stephen Douglas from Conservapedia.com -- #12 on Yahoo's list.

Which brings up an important point: Because many recently published Web sites use open source wiki software, which does generate named anchors for subsections, there's really no excuse for search engines that profess to provide deep links not to have indexed them. Since we've seen occasional deeply-linked examples pop up out of the blue on Google since September 2008, you would think at least Google would have had time to index such pages before declaring this part of its service officially live.

Expect 22.8% performance boost from next week's Firefox 3.6 beta

The developers at Mozilla have set next week as the tentative rollout window for the first public beta of Firefox 3.6 -- the first build of the organization's major update to 3.5 for which it's accepting analysis and advice from the general public. Betanews tests this week on a late version of the 3.6 beta preview, close to the organization's planned code freeze, indicate that users will be visibly pleased: generally faster JavaScript execution and much faster page rendering add up to a browser that's almost one-fourth faster than its predecessor -- by our estimate, 22.78% faster on average.

Betanews tested the latest available development and stable builds of all five brands of Web browser, on all three modern Windows platforms -- XP SP3, Vista SP2, and Windows 7 RTM. Once again, we threw the kitchen sink at them: our new and stronger performance benchmark suite, consisting of experiments in all facets of rendering, mathematics, control, and even geometry. As before, we use a slow browser (Microsoft Internet Explorer 7, the previous version, running in Windows Vista SP2) as our 1.00 baseline, and we produce performance indices representing browsers' relative speed compared to IE7.

Averaging all three Windows platforms, the latest Beta 1 preview of Firefox 3.6 posts a Betanews CRPI score of 9.02 -- just better than nine times the performance of IE7. Compare this against a 7.34 score for the latest stable version of Firefox 3.5.3, and 7.81 for the latest daily build of the Firefox 3.5.4 bug fix.
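Because every CRPI score is expressed relative to the same IE7 baseline (1.00), the baseline cancels out when two scores are compared, so dividing one score by another gives their relative speed. This sketch assumes each score behaves as a plain ratio to the baseline; it does not reproduce Betanews' actual CRPI computation. Applied to the published scores, it puts the 3.6 preview about 22.9% ahead of Firefox 3.5.3 and about 15.5% ahead of the 3.5.4 daily build. The small gap from the 22.78% estimate is presumably due to rounding of the two-decimal scores or a different averaging step:

```python
# Published CRPI scores, each relative to the IE7-in-Vista baseline (1.00).
ff36_preview = 9.02  # Firefox 3.6 Beta 1 preview
ff353 = 7.34         # stable Firefox 3.5.3
ff354_daily = 7.81   # Firefox 3.5.4 daily build

# ASSUMPTION: score = speed / baseline_speed, so score_a / score_b equals
# speed_a / speed_b and the baseline cancels out of the comparison.
gain_vs_stable = ff36_preview / ff353 - 1
gain_vs_daily = ff36_preview / ff354_daily - 1

print(f"vs 3.5.3: {gain_vs_stable:.1%} faster")
print(f"vs 3.5.4 daily: {gain_vs_daily:.1%} faster")
```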

Not that Windows is any enclave of safety: Microsoft's biggest Patch Tuesday

A lot of the presentations at security (or perhaps more appropriately, "insecurity") conferences such as Black Hat are devoted to experiments or "dares" for hackers to break through some new version of digital security. After a while, it gets to be like watching preschoolers daring one another to punch through ever-taller Lego walls. But amid last July's briefings came at least one scientifically researched, carefully considered, and thoughtfully delivered presentation: the result of a full-scale investigation by three engineers at a consultancy called Hustle Labs, demonstrating how the presumption of trust between browsers, their add-ons, and other code components can trigger the kinds of software failures that malicious code can exploit.

Engineers Mark Dowd, Ryan Smith, and David Dewey are being credited today with shedding light on a coding practice by developers that leaves the door open for browser crashes. The discovery of specific instances where such a practice could easily become exploitable is the focus of the most critical of Microsoft's regular second-Tuesday-of-the-month patches -- arguably the biggest of 13 bulletins addressing a record 34 fixes.

Among today's fixes is one that specifically addresses a relatively new class of Web apps that use XAML, Microsoft's XML-based front-end layout language, instead of HTML for presenting the user with controls. The class of apps is currently being called XAML Browser Application, or XBAP (perhaps Microsoft should have it shut off just for the lousy acronym). Simply browsing to a maliciously crafted XBAP application could create an attack vector, says one of Microsoft's bulletins published this morning.

But that isn't actually the problem; it's a symptom of a very serious problem uncovered by the Hustle Labs trio -- one that may generate several more security fixes in coming months. At the root of the problem is the fact that browser plug-ins and components external to browsers -- for instance, the components that tie browsers to the .NET Framework in order to run XBAP apps -- are given higher levels of trust than the browser itself. These days, trust levels are turned down on the browser to eliminate almost any chance of a simple JavaScript deleting elements of the user's file system without authorization; but plug-ins are often given a medium level of trust simply because they must interoperate with a component (the Web browser) that is outside their own context.

So when a plug-in creates references to new components, a principle called transitive trust mandates that this medium-level trust be transferred to the new component. And when that new component is an instance of an ActiveX object, that new level of trust may mean that if the object causes an exception, the mess it leaves behind could have just enough privilege attributed to it to execute malicious code.
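Transitive trust can be pictured with a deliberately simplified model. The `Trust` levels and the `Component.create()` method below are hypothetical illustrations, not Windows' actual integrity-level or security-zone machinery; the point is only that whatever a medium-trust plug-in instantiates starts out at medium trust too, above the browser that hosts it:

```python
from enum import IntEnum


class Trust(IntEnum):
    LOW = 0     # e.g. script running inside the locked-down browser
    MEDIUM = 1  # e.g. a plug-in that must interoperate outside its own context
    HIGH = 2


class Component:
    def __init__(self, name, trust):
        self.name = name
        self.trust = trust

    def create(self, name):
        # Transitive trust (toy model): the new component inherits its
        # creator's trust level, not the lower level of the hosting browser.
        return Component(name, self.trust)


browser = Component("browser", Trust.LOW)
plugin = Component("plugin", Trust.MEDIUM)
control = plugin.create("activex-control")
```

If `control` later faults, whatever it leaves behind runs with `Trust.MEDIUM`, which is the scenario the researchers describe.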

The mess itself, the Hustle Labs researchers illustrated last July at Black Hat, can be caused by a tricky data type that has raised my eyebrows since the early '90s when I witnessed its first demonstrations: It's the variant type, created to represent data that may be passed as a parameter between procedures or components, whose type may be unspecific or even unknown. Microsoft represents a variant in memory by pairing a type tag with its value as a single unit, and for object types, that value is actually a pointer to the object. The data may be a primitive type, but most often in the case of COM components (known to Web users as ActiveX components), it's a complex object composed of multiple data elements, assembled together like records in a database, some of which may themselves be variants.

The problem with using variants, as Microsoft learned the hard way (more than once), is that when you're trying to secure the interfaces between components, the absence of explicit types makes it difficult to ensure that they're behaving within the rules. But as the researchers discovered, it's the security code itself -- what they call marshaling code, resurrecting language from COM's heyday -- that can actually cause a serious problem, a mess that leaves behind opportunities for exploitation.

"The most obvious mistake a control can make with regard to object retention is to neglect to add to the reference count of a COM object that it intends to retain," the trio writes. A reference count helps a control to maintain a handle to the object it's instantiated. But marshaling code, in an effort to provide security to the system, will also amend the reference count; and when it thinks the control is done with the object, it will decrement that count in turn. So if the control had any other plans for the object, it has to add its own reference count to the same object.

To make a (very) long story short, there often end up being more pointers to the object than there are counted references to it. And with medium-level privilege, those extra pointers can theoretically be exploited.

The case-in-point involves those nasty variants. Adding references to objects in memory often involves copying the objects themselves; and in the case of variants, there's a special function for that. But to know to use that special function, marshaling code would have to be aware that the objects being copied and re-referenced are variants; so instead, they resort to the tried-and-true memcpy() library function. That function is capable of copying the complex object, but in such a "shallow" way that it doesn't give a whit about whether the contents of the complex object are complex objects in themselves -- and since memcpy() predates COM by a few decades, it doesn't increment the reference count for new instances of included objects that are created in the process. A pointer to the new object exists, of course, but not the reference that COM requires.

So an ordinary memory cleanup routine could clean up the contents of the duplicated contained object, even though a component has designs on using that object later. As the group writes, "If the variant contains any sort of complex object, such as an IDispatch [a common COM object], a pointer to the object will be duplicated and utilized without adding an additional reference to the object. If the result of this duplicated variant is retained, the object being pointed to could be deleted, if every other instance of that object is released."
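The difference between a shallow memcpy()-style copy and a proper variant copy can be shown with a toy reference-counting model. In the real OLE Automation API, the "special function" is `VariantCopy()`, which adds a reference when the variant holds an interface pointer such as an `IDispatch`. The Python classes and helpers below (`ComObject`, `Variant`, `shallow_copy()`, `proper_copy()`) are hypothetical stand-ins that only mimic that behavior for a single `VT_DISPATCH` case:

```python
import copy


class ComObject:
    """Toy stand-in for a COM object: freed when its reference count hits 0."""

    def __init__(self):
        self.refcount = 1  # the creator holds the first reference
        self.freed = False

    def add_ref(self):
        self.refcount += 1

    def release(self):
        self.refcount -= 1
        if self.refcount == 0:
            self.freed = True  # real COM would delete the object here


class Variant:
    """Toy tagged value: a type tag plus a payload (here, an object pointer)."""

    def __init__(self, vt, value):
        self.vt = vt
        self.value = value


def shallow_copy(v):
    # Like memcpy(): duplicates the pointer but adds no reference.
    return copy.copy(v)


def proper_copy(v):
    # Like VariantCopy(): duplicates the pointer AND adds a reference.
    dup = copy.copy(v)
    if dup.vt == "VT_DISPATCH":
        dup.value.add_ref()
    return dup


# Shallow copy: the retained copy outlives the only counted reference.
obj = ComObject()
retained = shallow_copy(Variant("VT_DISPATCH", obj))
obj.release()                    # every counted reference is gone...
dangling = retained.value.freed  # ...yet `retained` still points at it

# Proper copy: the copy holds its own reference, so the object survives.
obj2 = ComObject()
retained2 = proper_copy(Variant("VT_DISPATCH", obj2))
obj2.release()
still_alive = not retained2.value.freed
```

In C or C++ the "freed" object would actually be deallocated, so `retained` would hold a dangling pointer rather than a flag; that dangling pointer is what an attacker would try to exploit.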

There are a multitude of similar examples in this research paper of essentially the same principle in action: a principle that points to a fundamental flaw in the way COM objects have been secured up to now. The Hustle Labs team takes Microsoft to task only at one point in the paper, and quite gently, for implementing Patch Tuesday fixes that tend to resort to using "killbits" in the Registry for turning off COM components and ActiveX controls impacted by this kind of vulnerability, instead of reworking the marshaling code to address the problem at a fundamental level.

So today's round of Patch Tuesday releases may go into more detail than just resorting to killbits -- in a few situations this week, new patches actually go into further depth at patching problems that were said to have been patched already, including with the GDI+ library. But it's an indication that independent researchers with conscientious goals are truly getting through.

'Amateur' Linux IBM mainframe failure blamed for stranding New Zealand flyers

"What seems strange about this incident is that they are blaming it on a generator failure during testing," stated California Data Center Design Group President Ron Hughes, whose organization was responsible for neither the data center's current design nor the changeover. "If this failure did occur during testing, the question I would ask is why didn't the redundant generators assume the load or why didn't they just switch back to utility power."

Though Hughes has no specific knowledge of last Friday's incident, his insight does shed more light on the situation.

"A properly designed Tier 3 data center -- which is the minimum level required for any critical applications -- should have no single points of failure in its design. In other words, the failure of a single piece of equipment should not impact the customer," Hughes told Betanews. "A generator failure is a fairly common event, which is why we build redundancy into a system. In a Tier 3 data center, if you need one generator to carry the load, you install two. If you need two, you install three. This is described as N+1 redundancy. It allows you to have a failure without impacting your ability to operate...In a Tier 3 data center, it should take 2 failure events before the customer is impacted."

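Hughes' N+1 rule reduces to simple arithmetic: install enough units to carry the load, plus one spare, and the site rides through any single failure but not a second one. The load and generator ratings in this sketch, and the helper names, are hypothetical:

```python
import math


def generators_to_install(load_kw, unit_capacity_kw, spares=1):
    """N+1 sizing: N units to carry the load, plus `spares` redundant units."""
    n = math.ceil(load_kw / unit_capacity_kw)
    return n + spares


def load_carried(load_kw, unit_capacity_kw, installed, failures):
    """True if the units still running can carry the whole load."""
    return (installed - failures) * unit_capacity_kw >= load_kw


# Hypothetical site: 1,500 kW load, 1,000 kW generators -> N = 2, install 3.
installed = generators_to_install(1500, 1000)
one_failure_ok = load_carried(1500, 1000, installed, failures=1)
two_failures_ok = load_carried(1500, 1000, installed, failures=2)
```

With three units installed, one failure leaves 2,000 kW for a 1,500 kW load, while a second failure leaves only 1,000 kW, matching Hughes' point that it should take two failure events before the customer is impacted.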
Mac sales grow 11.8% as Apple takes 9.4% U.S. market share

Overall, Apple came in fourth for U.S. vendors, selling an estimated 1.64 million Macs stateside during the frame. That's up 11.8 percent from the 1.47 million the company sold in the same frame one year prior.

Last quarter, IDC reported that Apple shipped 1.2 million computers in the U.S., a year-over-year decline of 12.4 percent. That was good for a 7.6 percent stateside market share, in terms of shipments.

Overall, the U.S. PC market grew an estimated 2.5 percent. The bulk of that came from portable machines, as netbooks continue to grow in popularity, IDC said. In the U.S. in particular, consumers gravitated toward low-cost machines to save money on back-to-school purchases.

"Despite a continuing mix of gloom and caution on the economic front, the PC market continues to rebound quickly," said Loren Loverde, program director for IDC's Tracker Program. "The competitive landscape, including transition to portables, new and low-power designs, growth in retail and consumer segments, and the impact of falling prices are reflected in the gains by HP and Acer, as well as overall market growth."

Leading the domestic pack was HP, which held a 25.5 percent market share with 4.47 million PCs shipped. Close on its heels was Dell, which took 25 percent of the market, but was down 13.4 percent from a year prior. The second-largest computer maker sold 4.37 million units.

In third was Acer, which achieved staggering year-over-year growth of 48.3 percent. The netbook maker shipped an estimated 1.95 million units to take an 11.1 percent share of the U.S. market, up from 1.31 million units during the year-ago quarter.

Behind Apple, in fifth place, was Toshiba, which shipped 1.43 million units during the frame, good for an 8.1 percent share. All other PC manufacturers combined accounted for 20.9 percent, with 3.66 million units shipped.
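The IDC figures hang together: summing the six shipment counts gives a U.S. total of about 17.52 million units, and dividing each vendor's count by that total reproduces the quoted shares to within about 0.1 point (Apple's 1.64 million works out to about 9.4%). Recomputing growth from the rounded unit counts gives about 11.6% for Apple and 48.9% for Acer, a little off the quoted 11.8% and 48.3%, presumably because IDC works from unrounded figures:

```python
# U.S. shipments for the quarter, in millions of units (IDC figures cited above).
shipments = {
    "HP": 4.47,
    "Dell": 4.37,
    "Acer": 1.95,
    "Apple": 1.64,
    "Toshiba": 1.43,
    "Others": 3.66,
}

total = sum(shipments.values())
shares = {vendor: units / total for vendor, units in shipments.items()}

# Year-over-year growth from the rounded unit counts.
apple_growth = 1.64 / 1.47 - 1  # year-ago: 1.47 million Macs
acer_growth = 1.95 / 1.31 - 1   # year-ago: 1.31 million units

for vendor, share in shares.items():
    print(f"{vendor:8} {share:.1%}")
```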

Windows 7 Vs Mac: The Big War

Windows 7 is simply Microsoft's best operating system ever. Mac fanboys should worry and circle the wagons. Collectively, they're making a last stand against the PC giant. Please, please, boisterous Mac defenders, stand on the front lines and receive the first blows. You deserve them.

Mac market share actually means little to Microsoft, although it sure matters a lot to Mac bloggers thumping for Apple. The little dog barks, but the big dog -- with its enormous market share -- has the bite. Windows 7 is a new set of teeth. (I'll explain which competitors really matter to Microsoft after the subhead.)

The Apple fanboy crowd snipes at Windows 7 and overstates the importance of all things Mac. Some of the assertions about Mac or Windows 7 sales are so ridiculous they're laughable. For example, today, Apple 2.0 blogger Philip Elmer-DeWitt asked: "Will Windows 7 boost Apple sales?" Philip writes that "over the past decade, Mac shipments have grown with nearly every new Microsoft release," based on research released by Broadpoint AmTech yesterday.

That's not what the data shows. The biggest increase in Mac sales follows the release of the much-maligned Windows Vista. The data supports what is widely known: Vista was a Windows failure. As for the other spikes supposedly associated with Windows releases, Occam's razor dictates that something Apple did, not something Microsoft did, affected Mac sales. For example, the chart shows a huge spike in Mac sales for the second quarter of 2000, noting that Microsoft released Windows 2000 on February 17. But it ignores something else: a day earlier, Apple unveiled new Power Macs, finally reaching the long-delayed 500MHz clock-speed milestone and setting off a sales surge.

Another date: Oct. 25, 2001, and the launch of Windows XP. The chart shows flat Mac sales for that quarter and a substantial decline in the one following. In July 2001, Apple introduced dual-processor Power Macs, and accordingly there was a two-quarter spike in Mac shipments. Philip asks a silly question; the chart is more reasonably explained by Apple's actions than by Microsoft's.

Assessing the Real Competition

Microsoft's competitive problems aren't Macs but:

* Windows XP

* Software pirates

* Netbooks

Windows XP. Windows 7's biggest competitor will be Windows XP, which runs on about 80 percent of PCs, according to combined analyst reports. Microsoft's first challenge will be getting XP users to move up to Windows 7. By comparison, Mac market share was 7.6 percent in the United States in the second quarter, according to IDC. (Gartner and IDC should release preliminary Q3 numbers in the next couple of days.)

Mac share is inconsequential to Microsoft compared to Windows XP. My prediction: Windows 7 will slow Mac share gains, which have already declined over the last three quarters, according to both Gartner and IDC.

Software pirates. Collectively, software pirates pose the greatest competitive threat to Microsoft after Windows XP. Not Macs. Certainly not PCs running Linux. According to the Business Software Alliance, software piracy rates are highest in emerging markets, which are also where the potential for Windows PC adoption is greatest: 85 percent in Latin America, 66 percent in Central and Eastern Europe and 61 percent in Asia Pacific. By comparison, the piracy rate is 21 percent in North America and 35 percent in the European Union. So in Latin America, more than 8 out of 10 copies of software in use are stolen.

Here's a loaded question: How many people among the Betanews community use software they didn't pay for, even though the developer charges for it? Anyone care to respond in comments? Maybe this is easier: What do you feel you should have to pay for software?

More significantly, software piracy creates competitive opportunities for Macs because of the damage it does to the Windows brand. In a report released last week, BSA revealed a direct correlation between online software piracy at torrent and other file-trading sites and malware infections: countries with high online piracy rates also have high malware infection rates. Relatedly, 25 percent of a sample of 98 sites distributing pirated software or digital content also contained malware. Where does the blame fall when PCs are infected with viruses? On Microsoft and Windows, which tarnishes the brand and helps foster the popular folklore that Macs are more secure than Windows. Macs have problems, too, but they're not always as well publicized.

Netbooks. Microsoft also faces a greater competitive threat from netbooks, which are gobbling up Windows margins at an alarming rate. Microsoft makes substantially less on each Windows XP Home license shipped on a netbook than it does on either Windows Vista Home Basic or Home Premium.

During the second calendar quarter, when Windows Client revenue fell 29 percent year over year and income declined 33 percent, netbooks made up 11 percent of PC sales, according to Microsoft. Microsoft Chief Financial Officer Chris Liddell acknowledged that the increase in lower-margin consumer Windows licenses -- fed in part by growing netbook demand -- contributed to the declines.

Last week, DisplaySearch updated its second-calendar-quarter PC shipment data. DisplaySearch concluded that netbooks accounted for 22.2 percent of overall PC sales and 11.7 percent of revenues. Netbook sales jumped a staggering 264 percent year over year, while laptop sales excluding netbooks declined 14 percent.
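The gap between netbooks' share of units and their share of revenue says something about pricing: dividing a segment's revenue share by its unit share gives that segment's average selling price relative to the market average. A minimal Python sketch, assuming DisplaySearch's "sales" figure refers to unit shipments; `relative_asp` is a hypothetical helper introduced only for illustration:

```python
# Share figures from DisplaySearch, as quoted above (fractions of the whole market).
NETBOOK_UNIT_SHARE = 0.222     # assumed to be share of units shipped
NETBOOK_REVENUE_SHARE = 0.117  # share of PC revenue


def relative_asp(unit_share: float, revenue_share: float) -> float:
    """Return a segment's average selling price divided by the market-wide ASP.

    revenue_share / unit_share
      = (segment revenue / total revenue) / (segment units / total units)
      = (segment revenue / segment units) / (total revenue / total units)
    """
    return revenue_share / unit_share


netbook_vs_market = relative_asp(NETBOOK_UNIT_SHARE, NETBOOK_REVENUE_SHARE)
# Everything that is not a netbook takes the remaining units and revenue.
other_vs_market = relative_asp(1 - NETBOOK_UNIT_SHARE, 1 - NETBOOK_REVENUE_SHARE)

print(f"Netbook ASP / market ASP:      {netbook_vs_market:.2f}")
print(f"Non-netbook ASP / market ASP:  {other_vs_market:.2f}")
print(f"Non-netbook ASP / netbook ASP: {other_vs_market / netbook_vs_market:.2f}")
```

On these numbers, a netbook sells for roughly half the market-average price, and a non-netbook PC for a bit more than twice what a netbook does.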

Meanwhile, rising netbook sales exerted ever-greater downward pressure on average selling prices (ASPs). Laptop ASPs fell to $688 in the second quarter from $704 in the first quarter and $849 in Q2 2008. Netbook ASPs fell to $361 from $371 and $506, respectively, over the same periods. Microsoft has much more to worry about than Macs, particularly netbook cannibalization of the PC market and the negative impact on Windows margins.
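Expressed as percentage changes, the ASP figures above show how much steeper the year-over-year decline was than the sequential one. A short Python sketch; `pct_change` is a hypothetical helper, and the prices are exactly the ones quoted above:

```python
def pct_change(new: float, old: float) -> float:
    """Percentage change from an old value to a new one."""
    return 100 * (new - old) / old


# Average selling prices in U.S. dollars, as quoted above:
# (year-ago second quarter, first quarter, second quarter)
asps = {
    "Laptop": (849, 704, 688),
    "Netbook": (506, 371, 361),
}

for segment, (year_ago, previous_quarter, current) in asps.items():
    sequential = pct_change(current, previous_quarter)
    year_over_year = pct_change(current, year_ago)
    print(f"{segment}: {sequential:+.1f}% vs. Q1, {year_over_year:+.1f}% vs. Q2 2008")
```

On these figures, laptop ASPs slipped about 2 percent sequentially but roughly 19 percent year over year, while netbook ASPs fell roughly 29 percent year over year.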

Wi-Fi Vs Bluetooth

Imagine a wireless home network where devices communicate directly with one another instead of through the wireless router -- a sort of mesh network without the need to switch to ad hoc mode. Today the Wi-Fi Alliance announced that it has nearly completed the standard that could make such networks a reality: Wi-Fi Direct.

Wi-Fi Direct was previously known as "Wi-Fi Peer-to-Peer," and has repeatedly been referred to in IEEE meetings as a possible "Bluetooth killer." With this standard, direct connections between computers, phones, cameras, printers, keyboards, and future classes of components are established over Wi-Fi instead of over another wireless technology governed by a separate standard.

Even though the 2.4 GHz and 5 GHz bands are often dreadfully overcrowded in home networks, the appeal of such a standard is twofold: any certified Wi-Fi Direct device will be able to communicate directly with legacy Wi-Fi devices without any new software on the legacy end, and transfer rates will match those of infrastructure connections, thoroughly destroying Bluetooth. The theoretical maximum useful data rate for Bluetooth 2.0 + EDR is 2.1 Mbps, while 802.11g has a theoretical maximum throughput of 54 Mbps.
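To put the two ceilings in perspective, here is the ideal time to move a file at each rate. This is a back-of-the-envelope sketch, assuming a hypothetical 100 MB file, decimal units (1 MB = 8 megabits) and no protocol overhead. Real throughput is lower for both technologies, and the comparison is not strictly like-for-like, since 54 Mbps is 802.11g's raw signaling rate rather than a useful-data rate.

```python
# Theoretical maximum rates quoted above, in megabits per second.
BLUETOOTH_2_MBPS = 2.1
WIFI_80211G_MBPS = 54.0

FILE_MB = 100  # hypothetical file size in megabytes, for illustration only


def transfer_seconds(size_megabytes: float, rate_mbps: float) -> float:
    """Ideal transfer time: convert megabytes to megabits (x8), divide by the rate."""
    return size_megabytes * 8 / rate_mbps


bt = transfer_seconds(FILE_MB, BLUETOOTH_2_MBPS)
wifi = transfer_seconds(FILE_MB, WIFI_80211G_MBPS)
print(f"Bluetooth 2.0: {bt:.0f} s")
print(f"802.11g:       {wifi:.0f} s")
print(f"Speedup:       {bt / wifi:.1f}x")
```

Under these idealized assumptions the file takes about 381 seconds over Bluetooth versus about 15 seconds over 802.11g, a ratio of roughly 26 to 1.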

"Wi-Fi Direct represents a leap forward for our industry. Wi-Fi users worldwide will benefit from a single-technology solution to transfer content and share applications quickly and easily among devices, even when a Wi-Fi access point isn't available. The impact is that Wi-Fi will become even more pervasive and useful for consumers and across the enterprise," Wi-Fi Alliance executive director Edgar Figueroa said in a statement today.
